System-Level — DeepSeek’s Game-Changing Innovations

Explore how DeepSeek is transforming AI with memory-efficient attention mechanisms, dynamic training strategies, and practical industrial applications, pushing boundaries in real-world AI performance.

Disclosure: I use GPT search to collect facts. The entire article is drafted by me.

Let’s talk about DeepSeek: not just another AI buzzword, but a series of breakthroughs reshaping how we process and analyze huge amounts of data.

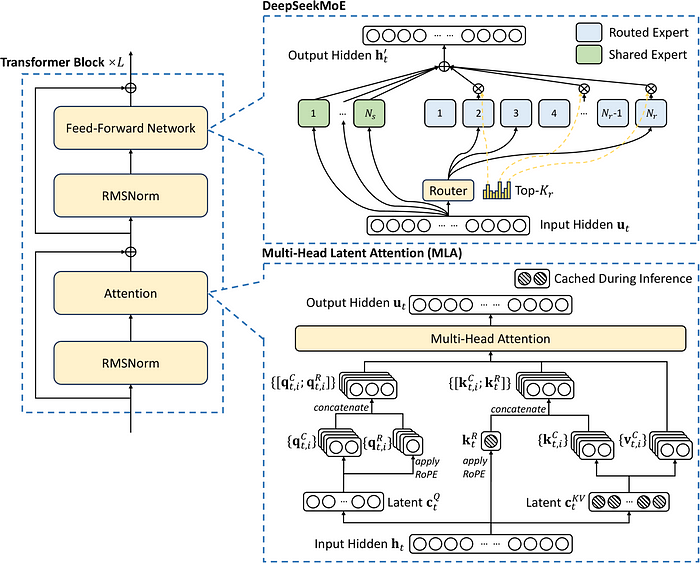

Multi-head Latent Attention

Transformers process input tokens through attention mechanisms that compute “query,” “key,” and “value” vectors for each token. During generation, the keys and values of all earlier tokens are kept in a Key-Value (KV) cache so they do not have to be recomputed at every step. While effective, this cache grows with sequence length, and for lengthy sequences it strains GPU memory.
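To get a feel for the scale of the problem, here is a rough back-of-the-envelope sketch of KV cache size for standard multi-head attention. The function name and the model dimensions in the example (32 layers, 32 heads, head size 128, 16-bit values) are illustrative assumptions, not DeepSeek's actual configuration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_elem=2):
    """Estimate KV cache size in bytes for standard multi-head attention.

    Every layer stores one key vector and one value vector per head
    for every token in the sequence, hence the leading factor of 2.
    bytes_per_elem=2 assumes 16-bit (fp16/bf16) storage.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Hypothetical model: 32 layers, 32 heads, head_dim 128, 4096-token context.
size_4k = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                         head_dim=128, seq_len=4096)
size_8k = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                         head_dim=128, seq_len=8192)

print(f"4k context: {size_4k / 2**30:.1f} GiB per sequence")
print(f"8k context: {size_8k / 2**30:.1f} GiB per sequence")
```

Under these assumed dimensions the cache costs 2 GiB per sequence at 4,096 tokens and doubles when the context doubles, since the cost is linear in both sequence length and batch size. That linear growth is what makes long contexts and large batches expensive to serve.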